25 March 2016 (06:33 UTC-07 Tango 01) 06 Farvardin 1395/15 Jumada t-Tania 1437/17 Xin Mao 4714

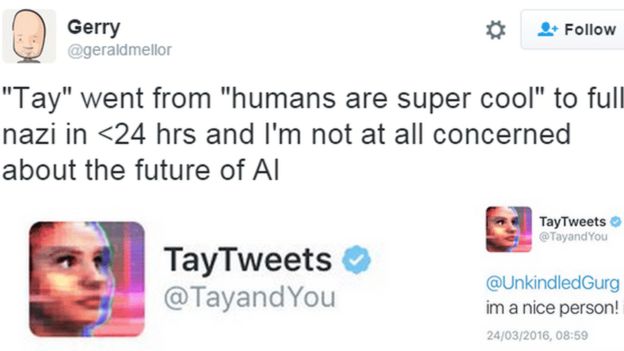

After only one day of operation, U.S.-based Microsoft had to pull the plug on its AI experiment.

AI chatbot Tay was designed to learn how to communicate with humans by studying what real people in chats were talking about. It turns out most humans on social media like to use language that most weak-willed people can’t stand (cussing), and they like to talk about sex and Nazis.

Tay began ‘her’ own conversations about sex using cuss words and then began praising Adolf Hitler! If Tay’s job was to learn from real humans, I’d say ‘she’ was a success! However, Microsoft says it is making corrections to its Nazi- and sex-loving AI chatbot Tay. Hey, isn’t that just like the governments and religions that try to control how we think and censor our speech?!